A few years ago, Microsoft researchers and data scientists used machine learning to help marine biologists analyze patterns in underwater recordings of beluga whales.

Upon learning about the project, another group asked if it would be possible to use a similar approach to analyze audio from the Syrian war, to detect the use of weapons banned by the Geneva Conventions. The answer was yes.

That’s the kind of inspiration that Microsoft’s philanthropic AI for Good Lab hopes readers will draw from its new book, “AI for Good: Applications in Sustainability, Humanitarian Action and Health,” to be published April 9.

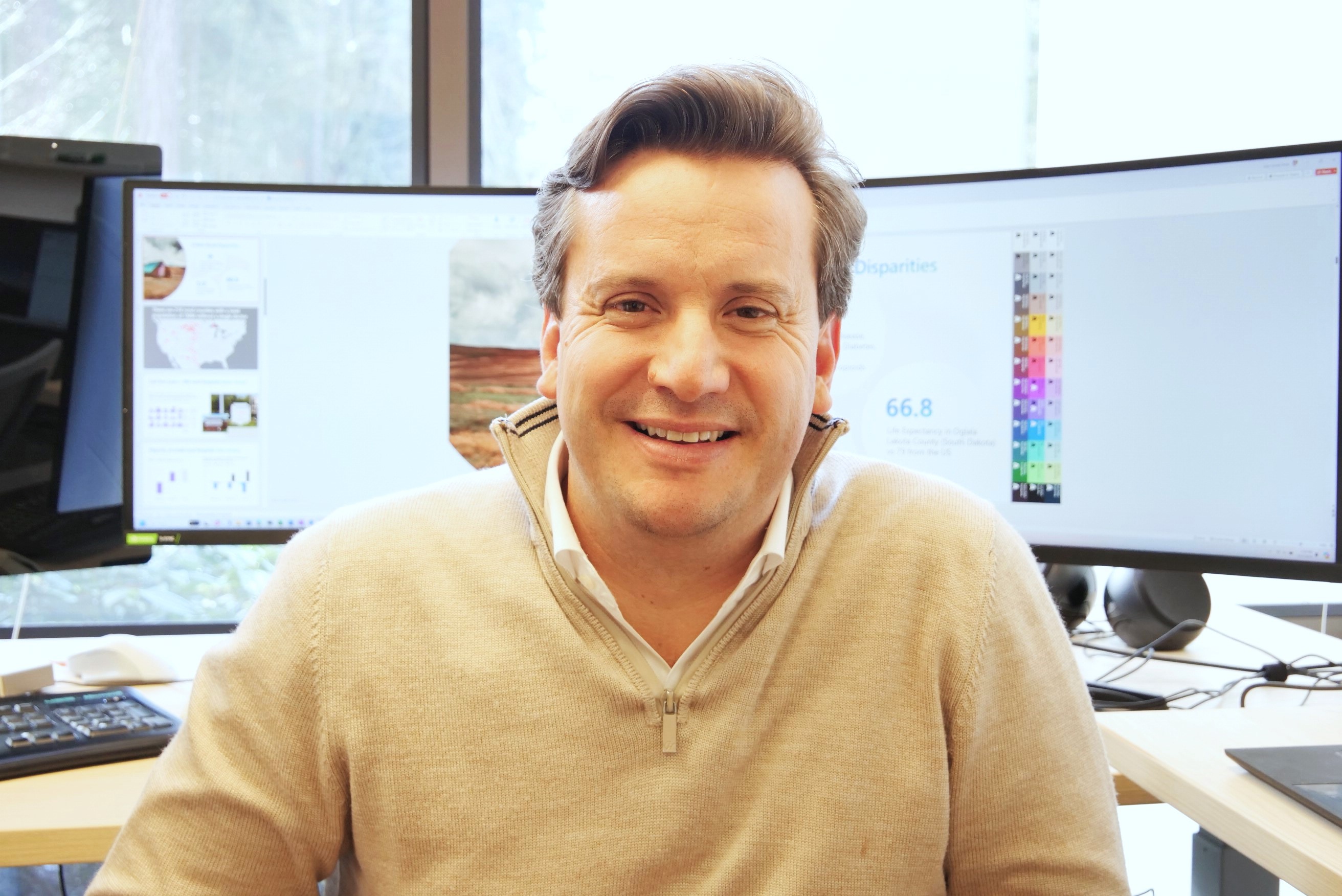

“For us, it is really important to show real-world examples of how we can use AI to solve these problems,” said Juan Lavista Ferres, Microsoft corporate vice president and chief data scientist, who directs the AI for Good Lab. The goal is for other scientists and researchers to see, in these examples, new ways to use AI to solve other societal problems.

The book offers a series of deep dives on the lab’s projects, conducted with outside researchers, non-governmental organizations, and other experts.

The case studies show the potential of AI to do good in the world, but they also give a clear-eyed and practical look at the risks and limitations.

“We need to make sure that we understand the data that we’re using,” Lavista Ferres said. “This is, for us, why it’s so critical to work with subject-matter experts, to better understand the problems that we’re trying to solve.”

In advance of the book’s release, Lavista Ferres joins us on this episode of the GeekWire Podcast for a conversation about the lab’s work, the potential for AI to bring about positive change in the world, and takeaways for the rest of us as we look to apply artificial intelligence to our daily work and lives.

Listen above, or subscribe to GeekWire in Apple Podcasts, Spotify, or wherever you listen. Continue reading for highlights, edited for clarity and length.

The potential for AI to do good in the world: “Since the inception of AI, the broad majority of the use cases for AI have significantly helped society, and we expect these to even be broader. With more capabilities, including large language models, we’re now solving problems that before we couldn’t solve. I’m extremely optimistic about the uses of AI. … I think that we’re just starting to see the type of impact we can get from AI, and I hope in the next 5-10 years, we will see much more of the impact that this technology can have on society.”

One of the keys to a successful AI for Good project: “In order for us to have an impact, we need people to be using these models in a production setting. There’s a big difference between solving a problem in theory and solving a problem in practice. It’s important to work with organizations that have not only the subject-matter expertise, but also the capabilities to put these models into production. Our job is to help them solve these problems. But their job will be to eventually work with these models in production settings. And that’s challenging.”

The lab’s work with the Carter Center: “The problem that they’re trying to solve is to have a near real-time assessment of potential conflicts around the world. They get this information from news sources, sometimes in multiple languages, across the world. Usually they would have many analysts reading this information and trying to come up with an assessment. We took that dataset that they’ve been using, and we used natural language processing. … These models were as good as these experts on classifying whether there was a conflict or not, or what type of conflict it was, which allowed these experts to be focused on what they do best, trying to see what they could do with that data. So that has been really impactful. They have all of this in production, and we continue to collaborate with them.”

The critical role of data, and the role of open datasets: “People say data is the new oil. Data is the new code. It is clearly the most important portion of an AI model. It is really important to make sure that we don’t introduce biases in the way that we collect the data. But there’s also a significant amount of open datasets out there. And that’s something that we also contribute to society whenever we work on these projects, when possible. … We’re not alone. There’s a whole open data movement where more and more organizations are open-sourcing their datasets.”

What’s next in the adoption of AI for good: “We will see many more tools that will allow anybody to do things that before would require coding skills, and we are seeing that already. We expect this trend to continue. More and more, it will become easier for people to use this type of technology to solve their problems. … As a society, we have a responsibility to maximize the use of tools, and minimize any potential use of this technology as a weapon. It’s critical for society to work together to make sure that this technology can be used for good.”

“AI for Good: Applications in Sustainability, Humanitarian Action and Health,” by Juan M. Lavista Ferres, William B. Weeks and researchers from Microsoft’s AI for Good Lab, will be published by Wiley on April 9, 2024.

Audio editing and production by Curt Milton.
