Anyone who has ever tried photojournalism knows how great it feels — and how difficult it can be — to take a good picture when covering a live news event. The variables of light, positioning, gestures, facial expressions, etc., are endless.

Even when you capture the right moment, it’s common to get back to the office, import the picture onto your computer, and discover that it’s not really in focus.

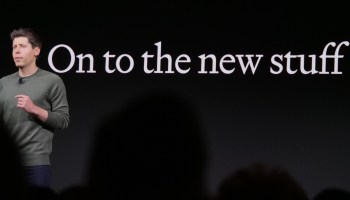

At least, that was my experience when photographing OpenAI CEO Sam Altman recently with a DSLR and telephoto lens from my seat in the press section at Microsoft’s Build developer conference in Seattle.

Many of the shots looked great on my camera, but when viewed on the unforgiving canvas of a high-definition external monitor, they weren’t nearly as crisp, despite my reliance on autofocus and the camera’s “intelligent auto” mode.

Normally, I would just live with it, and do my best to find the least-imperfect photo on my digital reel. But recently I noticed an AI photo-sharpening tool in Canva, the popular online design program. So I tried it. And I was stunned.

Check it out for yourself. Here’s the original, which was especially pixelated due to being cropped from a larger picture.

And here is the same photo with the AI improvements.

WOW! Amazing, right? Ain’t technology great?

I was especially impressed by the way the AI tool retained the integrity of the original, including the subtle creases in Altman’s face, and the gleam in his eye — clarifying the image without fundamentally changing the details.

Problem solved!! Well, sorta.

Over the past year or so, the GeekWire news team has settled on some basic principles of ethics and transparency when it comes to the use of AI images.

- First, we disclose whenever we use AI to generate an image that we run with an article, by saying in the caption that it was created with artificial intelligence, and noting which tool we used to make it.

- Second, we steer clear of using AI to generate photorealistic images for use in stories, sticking to AI illustrations that can’t be mistaken for actual pictures by people who don’t read the captions.

But this situation seems to be a gray area. The photo is real, but it’s AI-enhanced. This raised a couple of questions: Is it appropriate to edit a news photo in this way? And if so, should the use of AI be disclosed?

On the question of disclosure, of course, it’s always best to err on the side of transparency. However, one concern in the back of my mind was that readers might misread a disclosure about the use of AI to mean that the image was created entirely using artificial intelligence.

GeekWire’s news team discussed it all on our internal Slack channel. One question: Would we feel compelled to disclose the use of common photo editing tools for adjusting the levels of a photo, or the contrast, etc.? Even my use of autofocus and intelligent auto could fall into this category. Disclosing all of this would seem silly.

For example, GeekWire reporter Kurt Schlosser, an accomplished photographer, pointed out that there are all sorts of AI and similar tech already at work behind the scenes when we take pictures on our phones.

Disclosing something like this could create a slippery slope of also explaining, for example, when a photo was taken in portrait mode.

Another experienced photographer, podcast editor Curt Milton, offered this input.

> Every digital image is processed: highlight, shadow, white balance, color balance, etc. are all set by the chips in the camera. We don’t disclose that because it’s just assumed. Photoshop has sharpening tools, but they don’t work like this AI tool does. Again, no one would feel the need to disclose they used Smart Sharpen in Photoshop.
>
> I have always assumed that things I could have done in the enlarger don’t need to be disclosed (exposure, contrast, dodging and burning, etc.). But Photoshop now has “neural” filters that can de-age a person or extend the image or generate content (put a parrot on Sam’s shoulder), even add background blur where there wasn’t any. Those I might disclose.

Alan Boyle, GeekWire contributing editor, summed it up well: “In this new environment, the lines get fuzzy. Maybe we need to run this through an AI program to bring the lines into sharper focus.😉”

For guidance, professional journalism organizations offer ethics policies that apply to photo editing.

As one example, the National Press Photographers Association’s Code of Ethics says, “Editing should maintain the integrity of the photographic images’ content and context. Do not manipulate images or add or alter sound in any way that can mislead viewers or misrepresent subjects.”

This guideline is especially interesting when it comes to the AI editing tool, because it could be argued that the technology actually improved the integrity of the photographic image’s content and context, by making Altman appear more as he did on stage, without the pixelation.

However, guidelines established by The Associated Press are more specific and stringent, echoing some of the sentiments that Curt Milton offered above in the parallels to analog photo processing.

> Minor adjustments to photos are acceptable. These include cropping, dodging and burning, conversion into grayscale, elimination of dust on camera sensors and scratches on scanned negatives or scanned prints and normal toning and color adjustments. These should be limited to those minimally necessary for clear and accurate reproduction and that restore the authentic nature of the photograph. Changes in density, contrast, color and saturation levels that substantially alter the original scene are not acceptable. Backgrounds should not be digitally blurred or eliminated by burning down or by aggressive toning. The removal of “red eye” from photographs is not permissible.

My read is that even using the AI tool would be off-limits under the AP guidelines.

In the back of my mind through all of this are professional news photographers, including the amazing photojournalists whom many of us have worked with at newspapers such as the now-defunct Seattle Post-Intelligencer. They’ve spent years perfecting their craft, to be able to capture great images without AI assistance.

As a news reporter, I’m not a trained photojournalist, but I’ve marginally improved my photography skills over the years through much trial and error. In this context, using AI to fix my photographic shortcomings feels like cheating.

So what did I do? As it happened, in the midst of this discussion, I was recording an episode of the GeekWire Podcast with an AI expert, Léonard Boussioux, an assistant professor of information systems and operations management at the University of Washington’s Foster School of Business.

An avid wildlife photographer himself, he agreed that it was a gray area, and suggested a disclosure using the language that the photo was “sharpened by AI.”

In my mind, that avoided inadvertently implying that the photo was fully generated by AI. So I went with that in the caption for the photo of Altman on our colleague Lisa Stiffler’s story this week about the negotiations between OpenAI and Helion Energy, the Seattle-area fusion energy company where Altman is chair.

In the end, for me, this discussion illuminated a small slice of the vast uncharted territory we’re all going to be navigating in the years ahead. I’m amazed by the technology, and fascinated by the ethical debate it raises. And in the future, I’d rather avoid the dilemma entirely by nailing the shot in the first place.