Researchers at Microsoft, Providence Health System and the University of Washington say they’ve developed a new artificial intelligence model for diagnosing cancer, based on an analysis of more than a billion images of tissue samples from more than 30,000 patients.

The open-access model, known as Prov-GigaPath, is described in research published today by the journal Nature and is already being used in clinical applications.

“The rich data in pathology slides can, through AI tools like Prov-GigaPath, uncover novel relationships and insights that go beyond what the human eye can discern,” study co-author Carlo Bifulco, chief medical officer of Providence Genomics, said in a news release. “Recognizing the potential of this model to significantly advance cancer research and diagnostics, we felt strongly about making it widely available to benefit patients globally. It’s an honor to be part of this groundbreaking work.”

The effort to develop Prov-GigaPath used AI tools to identify patterns in 1.3 billion pathology image tiles obtained from 171,189 digital whole slides provided by Providence. The researchers say this was the largest pretraining effort to date for whole-slide modeling, drawing on a database five to 10 times larger than other datasets such as The Cancer Genome Atlas.
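As a rough, back-of-the-envelope check, those figures imply an average of several thousand tiles per slide. Because the 1.3 billion figure is itself rounded, the result is only approximate:

$$
\frac{1.3 \times 10^{9}\ \text{tiles}}{171{,}189\ \text{slides}} \approx 7{,}600\ \text{tiles per slide}
$$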

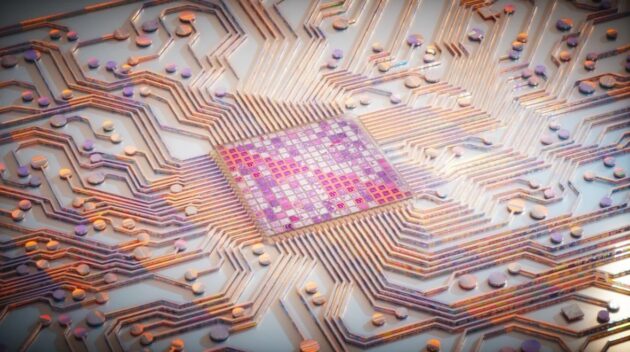

Whole-slide imaging, which transforms a microscope slide of tumor tissue into a high-resolution digital image, has become a widely used tool for digital pathology. However, one standard gigapixel slide is thousands of times larger than typical natural images — posing a challenge for conventional computer vision programs.

Microsoft’s GigaPath platform employed a set of AI-based strategies to break up the large-scale images into more manageable 256-by-256-pixel tiles and look for patterns associated with a wide range of cancer subtypes.
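The tiling step itself is easy to sketch. The Python below is a minimal, hypothetical illustration, not GigaPath's actual code: the names `tile_origins`, `count_tiles` and `crop` are invented for this example. It assumes that any partial tiles at the right and bottom edges of a slide are simply dropped. A real pipeline might instead pad those edges or skip tiles that contain only background.

```python
from typing import Iterator, List, Tuple

TILE_SIZE = 256  # tile edge length in pixels, as described in the article


def tile_origins(width: int, height: int, tile: int = TILE_SIZE) -> Iterator[Tuple[int, int]]:
    """Yield the (x, y) top-left corner of every full tile in a width x height image.

    Hypothetical simplification: partial tiles along the right and bottom edges are dropped.
    """
    for y in range(0, height - tile + 1, tile):
        for x in range(0, width - tile + 1, tile):
            yield x, y


def count_tiles(width: int, height: int, tile: int = TILE_SIZE) -> int:
    """Number of full tiles, computed directly instead of by enumerating them."""
    return (width // tile) * (height // tile)


def crop(image: List[List[int]], x: int, y: int, tile: int = TILE_SIZE) -> List[List[int]]:
    """Cut one tile out of an image stored as a list of rows of pixel values."""
    return [row[x:x + tile] for row in image[y:y + tile]]


if __name__ == "__main__":
    # A hypothetical 50,000 x 40,000-pixel slide (2 gigapixels).
    print(count_tiles(50_000, 40_000))  # 30420
```

Even under these simplifying assumptions, a single 2-gigapixel slide yields tens of thousands of tiles, which illustrates why whole slides are difficult for conventional computer vision programs to handle in one pass.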

Another step in the process involved fine-tuning the Prov-GigaPath model by associating the image data with real-world pathology reports. The researchers used OpenAI’s GPT-3.5 generative-AI model to “clean” the reports, removing information that was irrelevant to cancer diagnosis.

To assess Prov-GigaPath’s performance, the researchers set up a digital pathology benchmark that included nine cancer subtyping tasks and 17 analytical tasks.

“Prov-GigaPath attains state-of-the-art performance on 25 out of 26 tasks, with significant improvement over the second-best model on 18 tasks,” two of the study’s authors at Microsoft, Hoifung Poon and Naoto Usuyama, said in a blog posting about the research.

Poon and Usuyama said the AI-assisted approach to digital pathology “opens new possibilities to advance patient care and accelerate clinical discovery,” but added that much more remains to be done.

“Most importantly, we have yet to explore the impact of GigaPath and whole-slide pretraining in many key precision health tasks such as modeling tumor microenvironment and predicting treatment response,” they wrote.

In addition to Bifulco, Poon and Usuyama, the authors of the Nature paper, “A Whole-Slide Foundation Model for Digital Pathology From Real-World Data,” include Hanwen Xu, Jaspreet Bagga, Sheng Zhang, Rajesh Rao, Tristan Naumann, Cliff Wong, Zelalem Gero, Javier González, Yu Gu, Yanbo Xu, Mu Wei, Wenhui Wang, Shuming Ma, Furu Wei, Jianwei Yang, Chunyuan Li, Jianfeng Gao, Jaylen Rosemon, Tucker Bower, Soohee Lee, Roshanthi Weerasinghe, Bill J. Wright, Ari Robicsek, Brian Piening and Sheng Wang.